Sanjeev

Data Engineer

About Me

I am a results-driven Data Engineer with 9+ years of proven expertise in designing and implementing scalable data solutions.

My technical proficiency spans SQL, Python, dbt, and cloud platforms, complemented by a strong foundation in data warehousing, ETL pipeline development, and analytics architecture.

Throughout my career, I have successfully delivered high-impact projects that optimize data infrastructure, improve operational efficiency, and enable data-driven decision-making across organizations.

My approach combines technical excellence with collaborative problem-solving, ensuring that analytical solutions are not only technically sound but also aligned with business objectives and deliver measurable value.

I am committed to leveraging data engineering best practices to transform complex business challenges into actionable insights.

Resume

Experience

Data Engineer 4

Kraft Analytics Group

● Engineered a unified dbt framework on Snowflake, replacing 45+ monolithic SQL views and disparate JSON orchestrations; this transformation slashed client onboarding timelines from ~9 months to 2 sprints.

● Architecting a custom dbt parser to enforce Object-Oriented Programming (OOP) principles; this initiative targets a further reduction in implementation time to just 2 days by abstracting business logic into composable instances.

● Optimized historical seat-level transaction pipelines by refactoring FACT table logic, reducing processing runtime by 75% and significantly lowering compute costs.

● Developed a cross-client internal Python package utilizing Prefect for event-based orchestration, reducing manual intervention and cutting average pipeline runtime by 30%.

Senior Data Engineer

Klearnow.AI

● Led the data engineering team in redesigning a PySpark pipeline that loads shipment and merchandise data into the Redshift warehouse’s one-big-table via a multi-node EMR cluster.

● Collaborated with the AI team to build a data pipeline for real-time shipment tracking and analysis that ingests data extracted from third-party shipment contracts.

● Reduced shipment tracking dashboard latency from 48 minutes to 17 minutes by identifying and resolving bottlenecks and inefficient code practices across the entire data pipeline.

● Designed and developed a HubSpot API pipeline in Python to extract customer interaction data such as contacts, emails, calls, and notes, opening new avenues of insight. This increased customer retention by 18%.

● Reduced CPU utilization on the data warehouse by 22% by introducing materialized views and validating data before writing it into the respective schemas. This also fixed a multitude of data quality issues such as duplication and inaccuracy.

Data Engineer

AstraZeneca

● Engineered a data pipeline that ingests global rare-disease clinical trial data to support the R&D team’s drug development, keeping data accuracy as the primary business goal while avoiding data redundancy.

● Developed a pipeline that migrated study and patient data from multiple sources into our Snowflake warehouse, and in turn to our Qlik dashboards, through AWS EC2 and S3 buckets. The insight generated by the symptomology dashboards opened the clinical trials to a larger population of patients.

● Spearheaded a cross-functional data wrangling and integrity effort to predict deviation from other similar clinical trials by extracting data from various sources.

Graduate Research Assistant

Syracuse University

● Designed and containerized a scalable learning platform; 800+ students registered in the first semester after launch.

Data Analytics Intern

Marathon Energy

● Designed and implemented data pipelines with a Python-Selenium web scraper for revenue-driving teams to improve data governance. These pipelines fed Power BI dashboards with data extracted from sources like National Grid and exported it to our local servers. This also improved the data refresh rate by 94%.

Data Analyst

Latentview Analytics

● Surveyed stockholders and conceived ideas as part of the data science team that predicted the quarterly customer conversion rate and proposed strategies to improve it.

● Established in-house methods to extract results from end-to-end descriptive analysis that governed ad placement on the customer’s website, which increased sales and customer retention by 12%.

Data Engineer

Cognizant Technology Solutions

● Led a cross-functional team that used international customer transaction and global monetary data to enhance data accuracy and compliance through data warehousing techniques and complex queries.

● Reduced service downtime by 85 minutes per day by implementing a caching system that warmed up databases with daily foreign exchange data on service startup.

Skills

Languages

SQL, Python, dbt, PySpark, R

Databases & Tools

Snowflake, Redshift, Hive, AWS (EC2, S3, EMR, RDS), Power BI, Tableau, Google Analytics, Docker

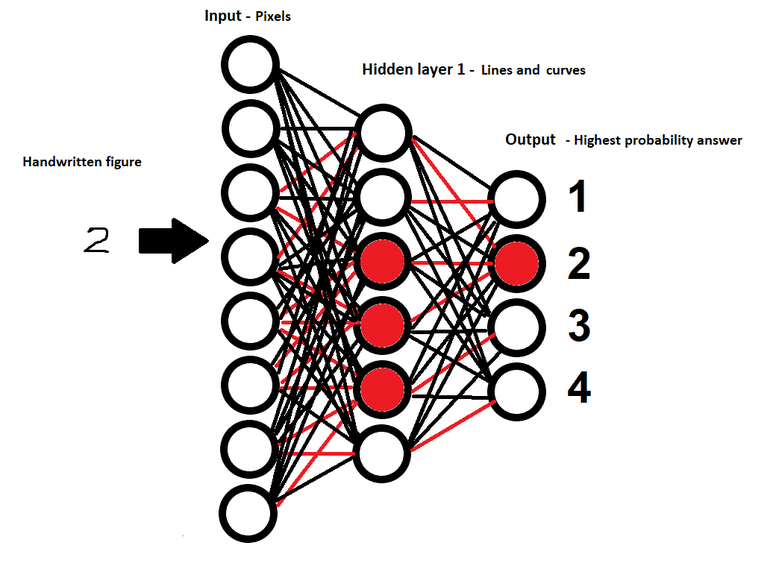

Data Analysis

pandas, NumPy, scikit-learn, Matplotlib, Shiny, ggplot2, Flask

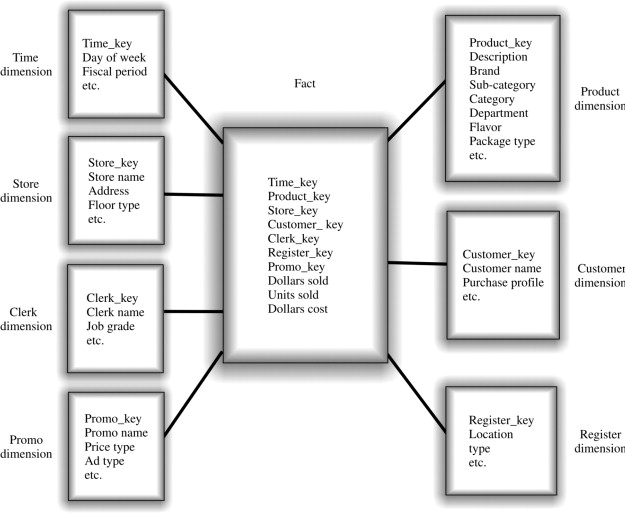

Data Modeling & ETL

SSIS, OLTP, OLAP, Snapshot, KPI, dbt

Version Control & Collaboration

Git, CircleCI, Jira, Confluence

Education

Master of Science in Applied Data Science

Syracuse University

Bachelor of Engineering in Electrical & Electronics Engineering

Anna University
